Charged for Unauthenticated Requests on Azure Blob Storage – And What I Learned From It

In April 2024, I had an unexpected and frustrating experience with Microsoft Azure that left us with a hefty bill for unauthenticated requests to our blob storage. This was a wake-up call. I am sharing the story to explain what happened, and to help others avoid the same pitfalls and better understand how Azure’s pricing model works.

The Incident

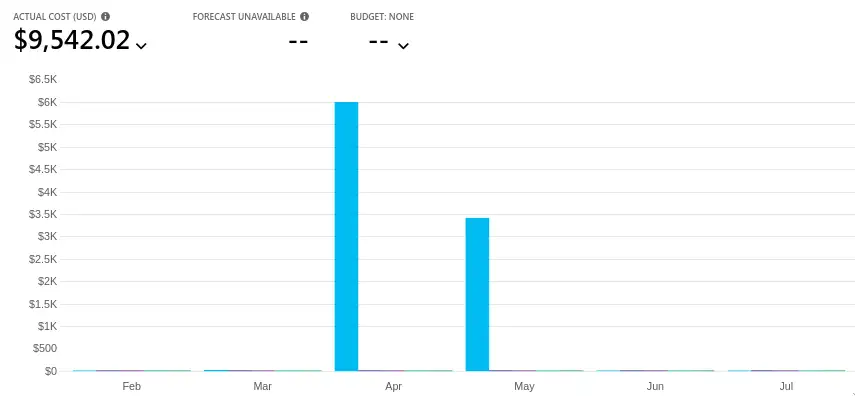

It all started when our team noticed a spike in our monthly Azure bill. After digging into the cost breakdown, I discovered that a significant portion of the charges came from a blob storage account dedicated to persisting logs from Grafana Loki. I was confused: the setup had been running for close to four months straight, and its monthly cost was less than $5.

We created a support ticket as soon as we found this and continued our internal investigation. It showed that one of the observability stack deployments on another Kubernetes cluster was failing. This cluster was in a different subscription, and we intended to promote it to production. When deploying the observability stack there, and Loki specifically, we did not want it to persist logs to Azure Blob Storage. The deployment was only a test run, so we used the same Helm chart as in the development environment, but put placeholder values in Loki’s Azure Blob Storage config instead of the actual values.

Once we found this, we acted quickly and uninstalled all the Helm charts related to observability, suspecting they were the cause.

How Did This Happen?

As mentioned earlier, we deployed the existing observability stack on a different cluster as a test run. We used the same Helm chart and followed the same deployment steps, but we replaced the storage values with placeholders. Every other configuration was bound to the cluster, so we had no concerns about doing so. But we missed a single point: we replaced the account_key and the container_name, but we left the storage account_name as it was.

    # storage config for loki
    storage_config:
      azure:
        # Your Azure storage account name
        account_name: <account-name>
        # For the account-key, see docs: https://docs.microsoft.com/en-us/azure/storage/common/storage-account-keys-manage?tabs=azure-portal
        account_key: <account-key>
        # See https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blobs-introduction#containers
        container_name: <container-name>

What ended up happening was that Loki tried to flush chunks into the blob storage and failed. We expected the failure, but we did not know that Azure charges for those requests. From what I understood, such a request should simply be rejected as a 400 Bad Request. The container name did not exist; it was just a placeholder. It literally said a_container_name.
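A check like the following, run before a deployment, is one way to catch a storage config in which real values sit next to placeholders. It is only a sketch: the function names, the placeholder patterns, and the idea of passing the `azure` section as a plain dictionary are assumptions made for illustration, not part of Loki or Helm.

```python
import re

# Patterns that suggest a value is a placeholder rather than a real setting.
# These are assumptions for illustration; adapt them to your own conventions.
PLACEHOLDER_PATTERNS = [
    re.compile(r"^<.*>$"),        # e.g. <account-name>
    re.compile(r"^a_[a-z_]+$"),   # e.g. a_container_name
    re.compile(r"^(changeme|todo|placeholder)$", re.IGNORECASE),
]

AZURE_KEYS = ("account_name", "account_key", "container_name")


def is_placeholder(value: str) -> bool:
    """Return True when the value is empty or looks like a placeholder."""
    return not value or any(p.match(value) for p in PLACEHOLDER_PATTERNS)


def check_azure_storage(azure_cfg: dict) -> list[str]:
    """Return a list of problems found in the azure storage settings.

    A config that is all placeholders or all real values passes; the risky
    case is a mix, where a real account name receives doomed requests.
    """
    flags = {k: is_placeholder(str(azure_cfg.get(k, ""))) for k in AZURE_KEYS}
    problems = []
    if any(flags.values()) and not all(flags.values()):
        real = sorted(k for k, is_ph in flags.items() if not is_ph)
        problems.append(
            "real-looking values left next to placeholders: " + ", ".join(real)
        )
    return problems


if __name__ == "__main__":
    # Hypothetical values that mirror the mistake described above.
    cfg = {
        "account_name": "realaccount",
        "account_key": "<account-key>",
        "container_name": "a_container_name",
    }
    for problem in check_azure_storage(cfg):
        print("WARNING:", problem)
```

Flagging only the mixed case is a deliberate choice here: it is the combination of a real account name with a fake key that sends failing requests to a billable endpoint.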

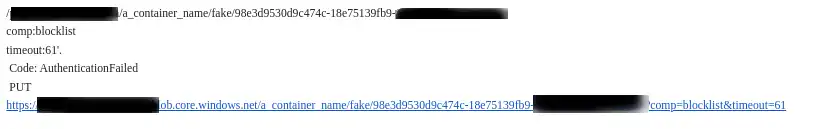

This is a screenshot from the findings in the Azure support ticket. As I understand it, Azure checks authentication at the storage account level and tries to validate the authenticity of the request before checking whether the container exists. That makes sense, but it is also a considerable issue. If someone leaks their storage account name, they are done: anyone can spam the Azure REST API as much as they want until the company goes bankrupt.
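The order of checks described in the ticket can be pictured with a toy model. This is not Azure's implementation; the class, its fields, and the returned strings are all hypothetical, and the only point is to show why a request with a real account name and a fake container still counts against that account.

```python
from dataclasses import dataclass, field


@dataclass
class ToyStorageAccount:
    """Toy model of the check order described above (not Azure's code)."""

    name: str
    key: str
    containers: set = field(default_factory=set)
    billed_requests: int = 0

    def handle(self, account_name: str, key: str, container: str) -> str:
        # A request addressed to a different account never reaches this one.
        if account_name != self.name:
            return "account not found"
        # From here on the request has hit a real account and is counted,
        # whether or not it goes on to succeed.
        self.billed_requests += 1
        # Authentication is evaluated before the container is looked up.
        if key != self.key:
            return "authentication failed"
        if container not in self.containers:
            return "container not found"
        return "ok"


if __name__ == "__main__":
    account = ToyStorageAccount("realaccount", "secret", {"loki-logs"})
    # Real account name, placeholder key and container, as in the incident.
    print(account.handle("realaccount", "a_key", "a_container_name"))
    print("billed requests:", account.billed_requests)
```

In this model the placeholder container name is never even inspected, because the request is rejected at the authentication step after it has already been counted.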

I only have one year of experience in the industry, but I believe Azure should stop charging for unauthenticated requests, or at least extend its authorization logic to check whether the container name is legitimate.

It is not just Azure. AWS had the same issue, and someone faced something similar there. Check this for his story. AWS, however, was kind enough to patch the issue.

Both AWS and Azure had this documented, but who expects their service provider to charge for unauthenticated requests? Charging for them means anyone on the internet can ruin your project if they know the storage account name, or the S3 bucket name in the case of AWS.

https://github.com/grafana/loki/issues/8821

But in the end, it is not just Azure. We also found a problem with how Grafana Loki behaves, and without it Azure’s policy of charging for unauthenticated requests would not have been a concern for us. Loki is implemented in a way that keeps trying to flush chunks even when the flush keeps failing.

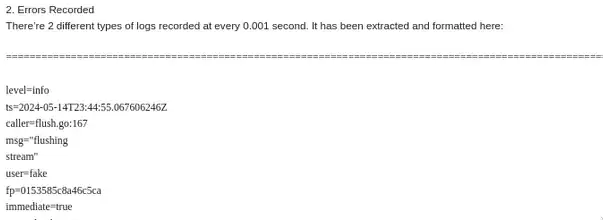

The two logs are an INFO log written when Loki tries to flush and an ERROR log saying the attempt failed and the chunks could not be flushed. This repeated in an endless loop for over 40 days.
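For contrast with an endless retry loop, here is a minimal sketch of a bounded retry with exponential backoff. It is not Loki's code (Loki is written in Go) and does not describe any Loki setting; `flush_with_backoff`, `FlushError`, and the default limits are hypothetical names and values chosen for illustration.

```python
import time
from typing import Callable


class FlushError(Exception):
    """Raised by the hypothetical flush function when a write fails."""


def flush_with_backoff(
    flush: Callable[[], None],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Try to flush, doubling the wait after each failure, then give up.

    Returns True on success and False once max_attempts is exhausted, so
    the caller can raise an alert instead of retrying forever.
    """
    delay = base_delay
    for attempt in range(1, max_attempts + 1):
        try:
            flush()
            return True
        except FlushError:
            if attempt == max_attempts:
                break  # no point sleeping after the final attempt
            sleep(delay)
            delay = min(delay * 2, max_delay)
    return False


if __name__ == "__main__":
    def always_fails() -> None:
        raise FlushError("authentication failed")

    # Short delays so the demo finishes quickly.
    ok = flush_with_backoff(always_fails, max_attempts=3, base_delay=0.01)
    print("flushed" if ok else "gave up after 3 attempts")
```

Capping the number of attempts turns a silent, billable loop into a visible failure that someone has to look at.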

The Cost Implications

Azure charges for both storage and operations, including requests to your blob storage. While the cost per request is relatively low, it can add up quickly when you’re dealing with thousands or even millions of requests. In my case, the unauthenticated requests accounted for a significant portion of the Azure bill, and I was left scrambling to understand and resolve the issue.
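To see how small per-request prices add up, a back-of-the-envelope calculation helps. In the sketch below only the 40-day duration comes from this incident; the request rate and the price per 10,000 operations are made-up inputs, not our actual numbers or Azure's price list.

```python
SECONDS_PER_DAY = 86_400


def estimate_request_cost(
    requests_per_second: float, days: float, price_per_10k: float
) -> tuple[float, float]:
    """Return (total_requests, estimated_cost) for a steady request rate."""
    total_requests = requests_per_second * SECONDS_PER_DAY * days
    cost = total_requests / 10_000 * price_per_10k
    return total_requests, cost


if __name__ == "__main__":
    # Hypothetical inputs: 10 failing requests per second for 40 days,
    # priced at an assumed $0.05 per 10,000 operations.
    total, cost = estimate_request_cost(10, 40, 0.05)
    print(f"{total:,.0f} requests, about ${cost:,.2f}")
```

Even with these modest assumed inputs the total runs to tens of millions of requests, which is why a retry loop left alone for weeks shows up so clearly on a bill.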

The chart shows the cost before and after the incident. Our usual cost, around 5 dollars a month, is not even visible on it.

What We Did to Fix It

What happened is unfortunate. We had the observability stack deployed to observe and monitor our infrastructure, but we did not have enough in place to monitor the observability stack itself. These are the steps we followed right after the incident.

We did not have much to fix. All we did was remove every observability-related deployment from the cluster. That resolved the issue, as Loki was the culprit here 🙂.

We took the following measures after the incident:

- Reevaluated our Azure budgets

- Configured proper alerts across Azure

- Started to conduct regular audits (weekly, month end)

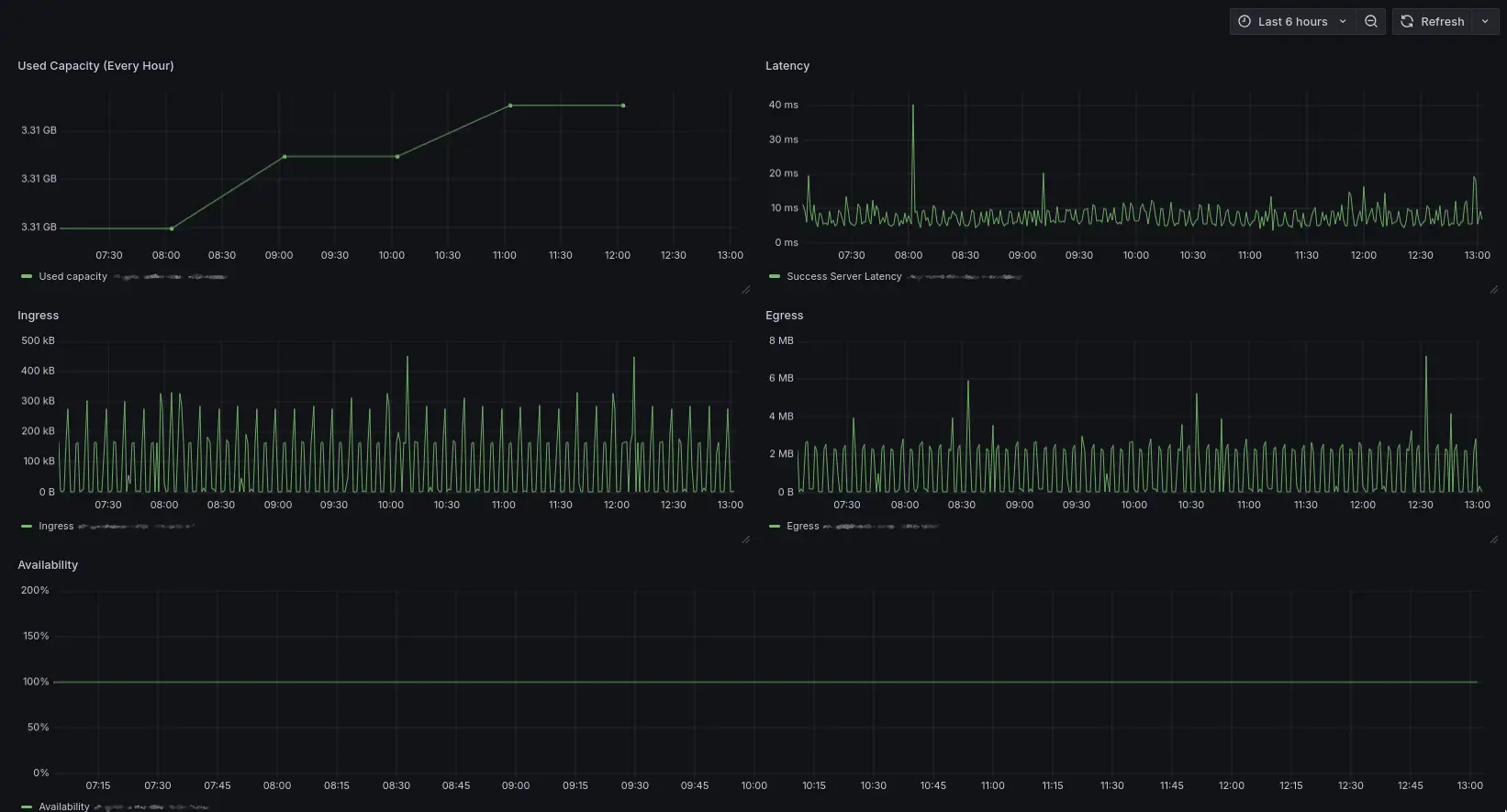

- Set up a Grafana dashboard for blob storage metrics using Azure Monitor.
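The idea behind the alerts and audits above can be sketched as a simple spike check over daily cost figures. This is not the Azure budget or alert API; the function name, the thresholds, and the sample data are hypothetical, and in practice the figures would come from a cost export or a metrics query.

```python
from statistics import mean


def find_cost_spikes(
    daily_costs: list[float],
    baseline_days: int = 7,
    factor: float = 3.0,
    min_cost: float = 1.0,
) -> list[int]:
    """Return indexes of days whose cost exceeds the recent baseline.

    A day is flagged when its cost is above both `factor` times the mean of
    the previous `baseline_days` days and the absolute floor `min_cost`.
    """
    spikes = []
    for i in range(baseline_days, len(daily_costs)):
        baseline = mean(daily_costs[i - baseline_days:i])
        threshold = max(baseline * factor, min_cost)
        if daily_costs[i] > threshold:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    # Made-up data: ten quiet days followed by a sudden jump.
    costs = [0.15] * 10 + [12.0, 15.0]
    for day in find_cost_spikes(costs):
        print(f"day {day}: cost {costs[day]:.2f} is well above the baseline")
```

The absolute floor keeps the check quiet when costs are tiny, while the relative factor catches a jump early instead of at month end.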

Lessons Learned

Nothing in the DevOps world is certain, but most devastating issues can be prevented if we pay enough attention to what we do. It is okay to make assumptions about how things might work, but do not depend on them all the time. Try to find enough evidence to support what you’re trying to do, because most of the time you’re working with code written by someone else, and you cannot predict how software will behave in every scenario. Always take enough time to do things right; a simple workaround might ruin everything you have been building.

Final Thoughts

While my experience with unauthenticated requests was frustrating, it was also a valuable learning opportunity. Cloud platforms offer incredible flexibility and scalability, but they also require careful management to avoid unexpected costs. By sharing my story, I hope to help others avoid similar issues and make the most of their Azure investments.

If you’ve had a similar experience or have additional tips for managing Azure costs, I’d love to hear from you in the comments below!